Your Dashboard Is Lying to You (Here's Why): How Leading Indicators Transform Your Value Stream

Why Your Financials Tell You What Happened—Not What's Coming

Last quarter, a CEO spent 3 hours dissecting revenue charts that were already 90 days old. Meanwhile, their deployment cycle time had doubled, technical debt was choking innovation, and customer health scores were silently plummeting.

The diagnosis? A pristine rearview mirror... and a completely foggy windshield.

Here’s the uncomfortable truth: most organizations are still confusing reporting with understanding their value stream.

In most boardrooms, we’re still obsessing over closed numbers (revenue, EBITDA, churn) when nothing about that cycle can be changed anymore. We’re analyzing the ship’s wake while the reefs lie hidden further along the flow.

Classic metrics are comforting because they seem indisputable: audited, stable, aligned with the P&L. They tell a clean story... after the fact.

But when a quarter deteriorates, the value stream links that broke had often been fragile for months:

Technical debt accumulated on critical systems

Deployment frequency slowing down

Teams burning out on bottleneck work

Progressive misalignment between product and actual usage

Looking only at output metrics is like watching the flow at the end of the chain without seeing the inventory, blockages, and rework loops upstream. You end up suffering domino effects you never saw building.

The shift happens when you agree to look at the entire value stream end to end, supported by leading indicators: early signals that connect what teams do today to effects that will propagate through the flow tomorrow.

What Actually Makes a Metric “Leading” (and Why Most Aren’t)

We call many things “leading” that really aren’t. To truly transform a value stream, an upstream indicator must meet five simple criteria:

✅ Observable early: It moves well before output indicators

✅ Frequent: Tracked at least weekly, often continuously

✅ Causal: Tied to a key flow link via cause → effect relationship

✅ Actionable: A specific team can influence it quickly

✅ Readable: Summarizable in one sentence for any decision-maker

Real-world examples across the value stream:

Go-to-market: How the qualification rate evolves between early pipeline stages, and the share of core target accounts among newly created opportunities. These signal the quality of the commercial upstream rather than closed deals.

Product: Time-to-value for new users, depth of North Star feature usage, 7/14/28-day retention. These signal how solid customers’ “aha moments” really are.

Delivery/tech: Deployment frequency, cycle time from commit to production, mean time to restore. These signal the software factory’s capacity to feed the entire value stream at a steady pace.

People: Team satisfaction, ratio of unplanned to planned work, team stability on critical domains. These signal the health of the human links in the chain.

These indicators lack P&L precision but offer something essential: the ability to act where the chain weakens, before results break.
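The delivery/tech signals in the list above can be computed from a simple log of production deployments. A minimal sketch, assuming a hypothetical `Deployment` record per change; it approximates time to restore from the deployment that caused the failure, which is a simplification (real incident data would record when the failure actually started).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median
from typing import Optional


@dataclass
class Deployment:                           # hypothetical record, one per production change
    committed: datetime                     # first commit of the change
    deployed: datetime                      # change reached production
    failed: bool = False                    # change caused an incident or rollback
    restored: Optional[datetime] = None     # service restored (failed changes only)


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def delivery_signals(deploys: list[Deployment], period_days: int) -> dict:
    lead_times = [hours(d.deployed - d.committed) for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Simplification: time to restore is counted from the failing deployment.
    restore_times = [hours(d.restored - d.deployed)
                     for d in failures if d.restored is not None]
    return {
        "deploys_per_week": len(deploys) / (period_days / 7),
        "median_commit_to_prod_h": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "mean_time_to_restore_h": mean(restore_times) if restore_times else None,
    }


t0 = datetime(2024, 1, 1)
log = [
    Deployment(t0, t0 + timedelta(hours=10)),
    Deployment(t0, t0 + timedelta(hours=20)),
    Deployment(t0, t0 + timedelta(hours=30), failed=True,
               restored=t0 + timedelta(hours=32)),
    Deployment(t0, t0 + timedelta(hours=40)),
]
print(delivery_signals(log, period_days=14))
```

Using the median for commit-to-production time keeps one unusually slow change from masking the typical flow.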

Lean Engineering: Making Problems Visible Before They Become Disasters

Lean Engineering, inspired by the Toyota Production System, isn’t about “doing more with less.” Its real ambition is to make problems that matter visible very early, tackle them as they emerge, and treat them at the root—where they occur in the value stream.

In this logic, leading indicators become the sensors of the company’s nervous system:

Flow: Work-in-progress (WIP) levels, WIP age, flow efficiency. When these signals degrade, they announce exploding wait times, swelling intermediate inventory, and pressure waves propagating between departments.

Quality at source: Proportion of work done right the first time, incident handling time, share of defects detected near their origin rather than at the end of the chain. Declining upstream quality inevitably sets up returns, rework, hidden costs, and disappointed customers.

Learning: Problems actually closed each week (identified, analyzed, treated, stabilized), delay between observing dysfunction and deciding on action. The shorter these learning loops, the more robust the value stream becomes.
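Several of these flow and quality-at-source signals reduce to simple arithmetic over work items. A minimal sketch, assuming a hypothetical `WorkItem` record that stores start and finish dates, the days someone actively worked on the item, and whether it came back for rework; flow efficiency is taken here as active time divided by total lead time for finished items.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class WorkItem:                  # hypothetical record
    started: date
    finished: Optional[date]     # None while still in progress
    active_days: float           # days of actual hands-on work
    reworked: bool = False       # came back after being declared done


def flow_snapshot(items: list[WorkItem], today: date) -> dict:
    open_items = [i for i in items if i.finished is None]
    done = [i for i in items if i.finished is not None]
    lead_days = sum((i.finished - i.started).days for i in done)
    return {
        "wip": len(open_items),
        "oldest_wip_age_days": max(((today - i.started).days for i in open_items), default=0),
        # Share of elapsed time spent actually working (the rest is waiting).
        "flow_efficiency": sum(i.active_days for i in done) / lead_days if lead_days else None,
        # Share of finished items that never needed rework.
        "first_time_right": sum(not i.reworked for i in done) / len(done) if done else None,
    }


items = [
    WorkItem(date(2024, 1, 1), date(2024, 1, 11), active_days=2),
    WorkItem(date(2024, 1, 1), date(2024, 1, 21), active_days=4, reworked=True),
    WorkItem(date(2024, 1, 5), None, active_days=1),
    WorkItem(date(2024, 1, 15), None, active_days=0),
]
print(flow_snapshot(items, today=date(2024, 1, 25)))
```

With these made-up items, only a fifth of elapsed time was hands-on work; the other four fifths were waiting, which is exactly the hidden inventory the flow signals are meant to surface.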

A story that illustrates this:

Each week, my teams and I tracked a beautifully designed chart of production incident lead time. Visually impeccable. Practically useless for decisions:

We could see individual incidents, some longer, some shorter

But couldn’t tell if the overall situation improved or degraded

Or where to focus efforts

The day we complemented this view with:

Open incident inventory

Incoming and outgoing incidents each week

Median resolution time rather than average

The picture completely changed. We went from a decorative curve to a genuine leading indicator of the health of the incident-handling value stream: we could see whether the inventory was emptying or filling, whether capacity matched demand, and whether “normal” incidents were being resolved faster or slower.

Same technical data, but suddenly a visible value stream.
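The three views added in this story (open inventory, weekly inflow and outflow, median resolution time) can be sketched in a few lines. This assumes a hypothetical `Incident` record holding the week it was opened, the week it was closed (or `None`), and its resolution time in days; the week numbers and durations in the usage are made up. The toy data includes one 30-day incident to show why the median is steadier than the mean: in week 11 it pulls the mean up to 11 days while the median stays at 2.

```python
from statistics import mean, median
from typing import NamedTuple, Optional


class Incident(NamedTuple):           # hypothetical record
    opened_week: int
    closed_week: Optional[int]        # None while still open
    resolution_days: Optional[float]  # None while still open


def weekly_view(incidents: list[Incident], week: int) -> dict:
    incoming = sum(1 for i in incidents if i.opened_week == week)
    closed = [i for i in incidents if i.closed_week == week]
    # Open inventory at the end of the week: opened by then, not yet closed.
    inventory = sum(
        1 for i in incidents
        if i.opened_week <= week and (i.closed_week is None or i.closed_week > week)
    )
    durations = [i.resolution_days for i in closed]
    return {
        "incoming": incoming,
        "outgoing": len(closed),
        "open_inventory": inventory,
        "median_resolution_days": median(durations) if durations else None,
        "mean_resolution_days": mean(durations) if durations else None,
    }


incidents = [
    Incident(10, 10, 1.0), Incident(10, 11, 2.0), Incident(10, None, None),
    Incident(11, 11, 1.0), Incident(11, None, None), Incident(7, 11, 30.0),
]
for week in (10, 11):
    print(week, weekly_view(incidents, week))
```

Read week over week, the inventory drops from 3 to 2 because 3 incidents went out and only 2 came in: the kind of trend a chart of individual lead times cannot show.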

How Data & AI Change the Game

Technology doesn’t just change data collection speed; it changes how we see the value stream.

Advanced analytics and AI enable:

Combining weak signals (navigation, support, product usage, market context) to build scores anticipating churn or contract expansion at critical chain points

Identifying patterns humans wouldn’t imagine—specific customer action sequences systematically preceding upgrades or departures

Moving from purely descriptive dashboards to indicators directly suggesting intervention points in the flow
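As a minimal illustration of combining weak signals into a single score, the sketch below applies a logistic function to a weighted sum of account signals. The signal names and weights are hypothetical placeholders; in practice the weights would be fitted on historical accounts (for example with logistic regression) rather than set by hand.

```python
import math

# Hypothetical weights; a real model would learn them from accounts
# that did or did not churn.
WEIGHTS = {
    "login_drop_pct": 0.04,          # % drop in logins vs. previous month
    "support_tickets_30d": 0.30,     # tickets opened in the last 30 days
    "core_feature_days_30d": -0.10,  # days the core feature was used
}
BIAS = -1.0


def churn_risk(signals: dict[str, float]) -> float:
    """Logistic score in (0, 1); higher means more churn risk."""
    z = BIAS + sum(w * signals.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1 / (1 + math.exp(-z))


healthy = {"login_drop_pct": 0, "support_tickets_30d": 0, "core_feature_days_30d": 20}
at_risk = {"login_drop_pct": 50, "support_tickets_30d": 4, "core_feature_days_30d": 2}
print(round(churn_risk(healthy), 2), round(churn_risk(at_risk), 2))
```

No single signal here is alarming on its own; the score only becomes useful because it aggregates several weak ones into something a customer team can act on.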

Three digital projects illustrate this shift:

Walmart (Distribution): Walmart used near-real-time analytics to cross-reference checkout data, search signals, and web behavior. Store-level predictive inventory indicators made it possible to react before stockouts on certain logistics links, particularly for highly volatile seasonal products. The leading signal sat at the level of local demand; the impact showed up later in sales per square foot.

Retail banking: Some banks now track “digital adoption rate” and cloud migration as upstream indicators of service cost and of their capacity to absorb volume. As long as these signals keep progressing, friction points in the processing chain decrease and unit costs stay under control despite growth.

Starbucks (Digitally augmented physical retail): With its “digital flywheel,” Starbucks relied on simple leading indicators: mobile ordering adoption rate and loyalty program member activity. By reinforcing these digital habits at key moments of the customer journey, the company smoothed in-store throughput and secured a recurring flow of value, well before the annual accounts reflected it.

In all cases, AI and data aren’t window dressing: they make it possible to map the value stream differently, revealing bottlenecks, levers, and opportunities that classic metrics don’t show.

Connecting Leading Indicators to Financial Language (Without Losing the Value Stream)

A CEO doesn’t buy continuous deployment charts or developer satisfaction indices; they buy cash flow, reduced risk, and sustainable value from a well-controlled value stream.

Connecting leading indicators to this grammar is essential:

Cycle time is the digital counterpart of inventory turnover speed in manufacturing. In a factory, idle stock ties up cash; in software, undelivered backlog ties up cash already paid out as salaries. Reducing this delay improves the return on the product budget and speeds up how value turns over in the chain.

Technical debt is a hidden liability: the higher the maintenance-to-new-features ratio climbs, the more the organization pays for past choices. A leading indicator like the unplanned work ratio makes this liability visible well before the market perceives a decline in innovation, pointing to where the value stream risks freezing.

Reliability metrics (change failure rate, mean time to restore) act as proxies for reputational risk and for value stream interruptions as customers experience them.

When you make this link explicit, leading indicators stop being a “tech” or “method” topic: they become a tool to reason about economics, risks, and capital allocation along the value stream.
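The cycle-time-to-cash link can be made concrete with Little’s Law (average work in progress = throughput × cycle time) plus one stated assumption: that each item’s cost accrues evenly while it is in progress, so items in flight carry on average half their cost. Under that assumption, spend sitting in undelivered work is roughly weekly spend × cycle time / 2. The function names and figures below are hypothetical.

```python
def average_wip(throughput_per_week: float, cycle_time_weeks: float) -> float:
    # Little's Law: average WIP = throughput x time in system.
    return throughput_per_week * cycle_time_weeks


def cash_in_undelivered_work(weekly_spend: float, cycle_time_weeks: float) -> float:
    # Assumes cost accrues evenly over each item's cycle time, so items
    # in progress carry on average half their total cost.
    return weekly_spend * cycle_time_weeks / 2


def unplanned_ratio(unplanned_hours: float, planned_hours: float) -> float:
    # Share of capacity consumed by unplanned work (incidents, urgent fixes).
    return unplanned_hours / (unplanned_hours + planned_hours)


before = cash_in_undelivered_work(weekly_spend=50_000, cycle_time_weeks=6)
after = cash_in_undelivered_work(weekly_spend=50_000, cycle_time_weeks=3)
print(average_wip(10, 6), before, after, before - after)
print(unplanned_ratio(35, 65))
```

In this made-up example, halving cycle time from six to three weeks frees about 75,000 of spend that was waiting on delivery, without changing the budget.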

The “Iceberg” Dashboard: Reassuring While Transforming

The right balance looks like an iceberg dashboard centered on the value stream:

Above the waterline: Output indicators (revenue, margin, churn, NPS, cash). They describe how value materialized at the end of the chain.

Below the surface: Leading indicators illuminating what happens inside:

Digital capabilities (deployment frequency, cycle time, technical debt ratio)

Customer signals (North Star feature usage, digital channel adoption, health scores on key segments)

Human signals (team satisfaction, stability on critical domains, learning intensity)

The essential part is making the links between these two levels explicit:

“Revenue is stable this quarter, but our cycle time is up 15% and unplanned work exceeds 35%. If we don’t invest now in automation and debt reduction, we’re accepting that the value stream will seize up within two quarters.”

An indicator that triggers no decision is, to me, expensive noise. A relevant leading indicator illuminates a concrete link in the value stream and triggers both discussion and trade-off decisions.
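One way to keep an indicator from becoming noise is to encode the decision it should trigger. The sketch below turns the boardroom sentence above into explicit rules; the 15% cycle-time growth and 35% unplanned-work thresholds come from that example, while the `Snapshot` type, the `decision_flags` function, and the message wording are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:                    # hypothetical quarterly snapshot
    cycle_time_days: float
    baseline_cycle_time_days: float
    unplanned_ratio: float         # 0.0 to 1.0


def decision_flags(s: Snapshot, max_growth: float = 0.15,
                   max_unplanned: float = 0.35) -> list[str]:
    flags = []
    # Rounded so that, e.g., 11.5 vs 10 days counts as +15% despite float noise.
    growth = round(s.cycle_time_days / s.baseline_cycle_time_days - 1, 4)
    if growth >= max_growth:
        flags.append(f"Cycle time up {growth:.0%}: review automation investment")
    if s.unplanned_ratio > max_unplanned:
        flags.append(f"Unplanned work at {s.unplanned_ratio:.0%}: fund debt reduction")
    return flags


print(decision_flags(Snapshot(11.5, 10, 0.38)))
print(decision_flags(Snapshot(10, 10, 0.20)))
```

The thresholds matter less than the fact that each flag names the decision it is meant to provoke, which is what separates a leading indicator from decoration.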

The Bottom Line

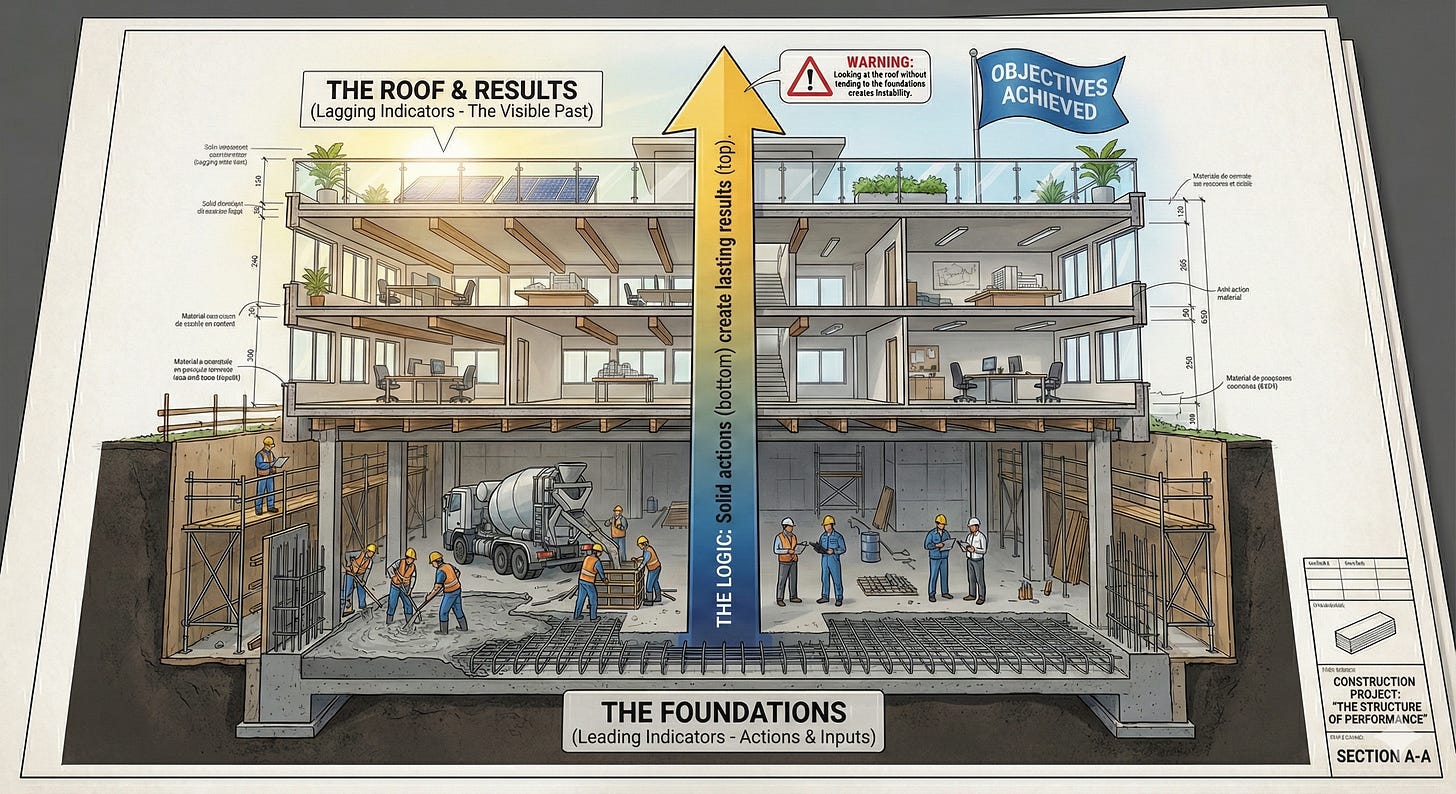

Moving from output indicators alone to leading indicators isn’t a reporting refinement. It’s a structural choice: looking at the value stream in its dynamics, instead of only commenting on its extremities.

An organization that maps its flows, relies on Lean Engineering to see the right problems early, enriches its signals through digital and AI, then connects these indicators to its investment trade-offs, becomes profoundly anticipatory: it sees risks while they are still solvable and captures opportunities before they close.

You don’t build sustainable advantage with just a perfect rearview mirror. You build it by installing, at the company’s heart, a radar that illuminates the value stream, link by link—and having the discipline to act on these signals.

💬 YOUR TURN: Which leading indicator would change everything in your organization if you started tracking it this week? Hit reply and tell me—I read every response.